Key Takeaways

- Gemini is Google’s new “multimodal” generative AI model that can process text, images, videos, and sound, producing outputs in multiple formats.

- Google claims Gemini Ultra outscores both human experts and OpenAI’s GPT-4 on the MMLU language understanding benchmark.

- You can try Gemini through Google’s Search Generative Experience, the NotebookLM app, and other services.

We seem to be in the full swing of a second age in which any popular technology has to have artificial intelligence in it. Nary a decade prior, bits of machine learning made their way into little tricks like identifying subjects in a camera’s vision or creating sentences that may or may not actually be useful. Now, as we approach a peak of generative AI (with more peaks perhaps on the way), Google has upped the stakes with its new “multimodal” model called Gemini.

If you’re curious about what makes Gemini tick, why it’s so different from the likes of OpenAI’s ChatGPT, and how you might get to experience it at work, we’re here to give you the lay of the land.

What is Gemini and how does it work?

Google debuted Gemini on Dec. 6, 2023, as its latest all-purpose “multimodal” generative AI model. It came out in three sizes: Ultra (which was held back from commercial use until February 2024), Pro, and Nano.

Up to this point, widely available large language models, or LLMs, worked by analyzing input in one media format and expanding on it in a desired output format. For example, OpenAI’s Generative Pre-trained Transformer model, or GPT, deals in text-to-text exchanges, while DALL-E translates text prompts into images. Each model would be tuned for one type of input and one type of output.

Multimodal

This is where all this talk of multimodality comes in: Gemini can take in text (including code), images, videos, and sound and, with some prompting, put out something new in any of those formats. In other words, one multimodal LLM can theoretically do the jobs of several dedicated single-purpose LLMs.

Google’s sizzle reel for Gemini gives you a good idea of just how polished interactions with a decently equipped model can be. Don’t let the video and its slick editing fool you, though: none of these interactions happen as quickly as they appear to on screen. You can learn about the meticulous process Google went through to engineer its prompts in a Google for Developers blog post.

That said, you do get a sense of the level of detail and reasoning Gemini is able to bring to whatever it’s tasked with doing. I was personally most impressed with Gemini being able to see an untraced connect-the-dots picture and then correctly determine it to be of a crab (4:20). Gemini was also asked to create an emoji-based game where it would receive and judge answers based on where a user pointed on a map (2:05).

What can you do with Gemini?

You don’t typically come up to an LLM and ask it to write Shakespeare for you, and it’s the same for Gemini. Instead, you’ll find it at work on a variety of surfaces. Google says it has been using Gemini to power its Search Generative Experience as well as the experimental NotebookLM app.

At Gemini’s launch in December 2023, the company took its existing Bard generative chatbot — formatted similarly to ChatGPT — which was running off an iteration of its older Pathways Language Model (PaLM), and switched it onto Gemini Pro. In February 2024, Google announced it would go a step further by rebranding Bard as Gemini and introducing a paid service tier called Gemini Advanced. The move also brought about a dedicated Android app and a carve-out in the Google app for iOS in addition to the open web client. It’s available to use in more than 170 countries and regions, but only in US English. You can learn more about this particular experience in our dedicated explainer of Gemini, the chatbot.

Bard wasn’t the only thing that got renamed. Google Workspace and Google Cloud customers exposed to generative AI features under the Duet moniker will now see Gemini take its place. It will still help users write up documents or diagnose code branches depending on the situation, but the look will be a tad different.

Android users can also experience some enhanced features with Gemini Nano, which is meant to be loaded directly onto devices. Pixel 8 Pro owners got first crack, but other Android 14 devices will be able to take advantage of Nano down the line. Third-party app developers were able to take Gemini for a spin in Google AI Studio and Google Cloud Vertex AI.

How does Gemini compare to OpenAI’s GPT-4?

OpenAI beat Google to the punch with the launch of the nominally multimodal GPT-4 with GPT-4V (the ‘V’ is for vision) back in March 2023, updating it again with GPT-4 Turbo in November. GPT remains conservative in its approach as a text-focused transformer, but it does now accept images as input.

Performance

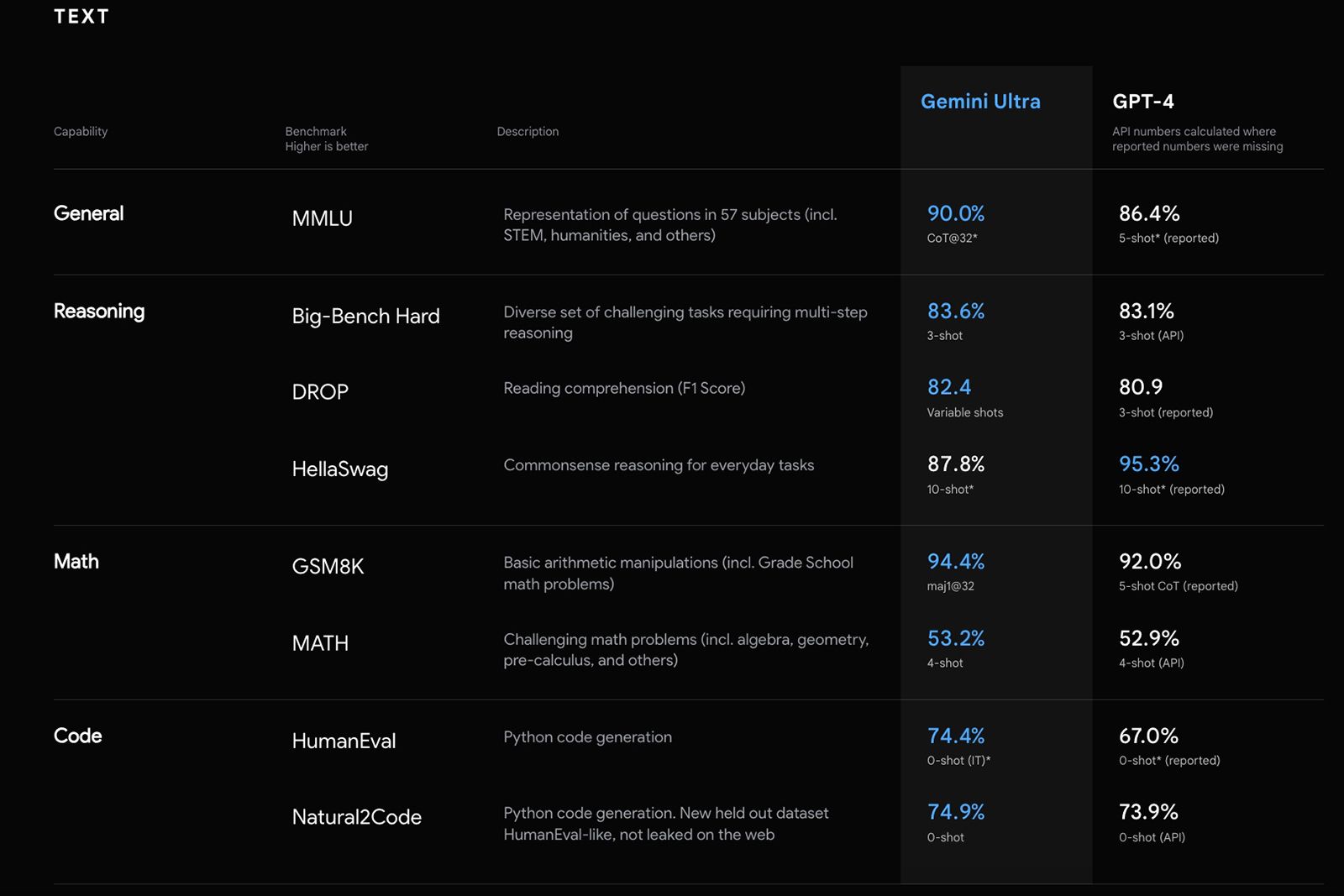

Benchmarks are far from the be-all and end-all when judging the performance of LLMs, but numbers in charts are what researchers kinda live for, so we’ll humor them for a little bit.

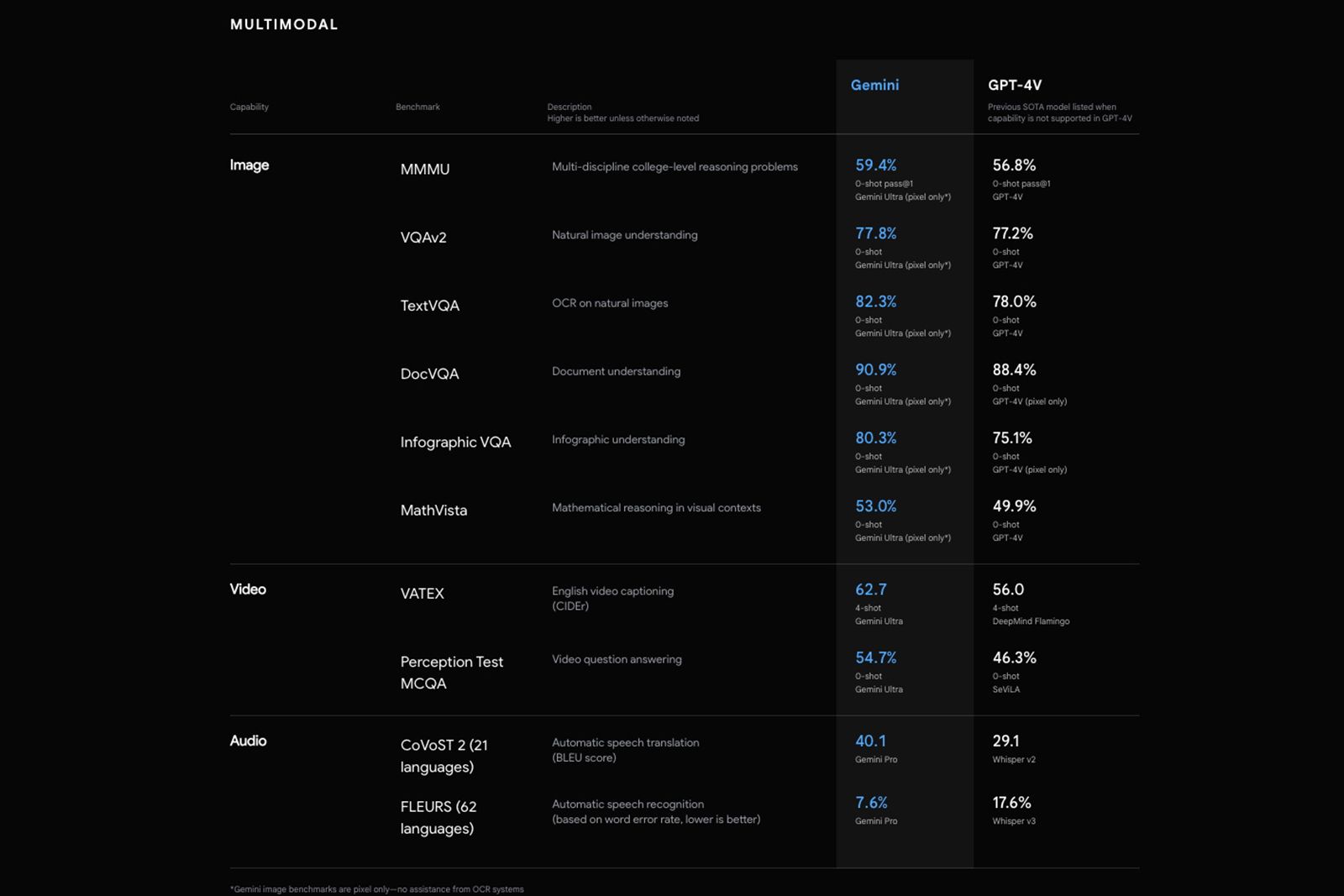

Google’s DeepMind research division claims in a technical report (PDF) that Gemini Ultra is the first model to outdo humans on the Massive Multitask Language Understanding (MMLU) benchmark, with a score of 90.04% versus the top human expert score of 89.8% and GPT-4’s reported 86.4%. Gemini Ultra also has GPT-4 beat on the Massive Multi-discipline Multimodal Understanding (MMMU) benchmark by a score of 59.4% to 56.8%. The smaller Gemini Pro’s best showings stand at 79.13% for MMLU (slightly better than Google’s own PaLM 2 and notably better than GPT-3.5) and 47.9% for MMMU.
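To put those margins in perspective, here is a minimal Python sketch that works out the gaps in percentage points. The scores are the ones quoted above; the dictionary layout and the `gap` helper are illustrative assumptions, not anything taken from Google’s report.

```python
# Benchmark scores (percent) as quoted in the paragraph above.
# The structure and names here are illustrative, not from Google's report.
mmlu = {
    "Gemini Ultra": 90.04,
    "Human expert": 89.8,
    "GPT-4": 86.4,
    "Gemini Pro": 79.13,
}
mmmu = {
    "Gemini Ultra": 59.4,
    "GPT-4": 56.8,
    "Gemini Pro": 47.9,
}


def gap(scores, a, b):
    """Return a's lead over b in percentage points, rounded to 2 places."""
    return round(scores[a] - scores[b], 2)


if __name__ == "__main__":
    print("MMLU: Ultra vs. human expert:", gap(mmlu, "Gemini Ultra", "Human expert"))
    print("MMLU: Ultra vs. GPT-4:", gap(mmlu, "Gemini Ultra", "GPT-4"))
    print("MMMU: Ultra vs. GPT-4:", gap(mmmu, "Gemini Ultra", "GPT-4"))
```

Seen this way, Gemini Ultra’s claimed lead over the top human expert score on MMLU is under a quarter of a percentage point, which is one more reason not to read too much into any single chart.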

Try it yourself

Really, the best way to compare and contrast the usefulness of Gemini versus GPT-4 is to try each model out for yourself.

As we’ve said, Gemini is available as a chatbot (formerly Bard). For GPT-4, you’ll be able to use that model for free via Bing Chat. While both services accept prompts with text and a single image, only Bing Chat is able to generate images as of right now, though it uses a separate DALL-E integration to do so. For as amazing as that demo video was, the Gemini chatbot won’t be able to play Rock, Paper, Scissors with you today or in the near term. It’s still early days for Gemini.

Why is Google introducing Gemini now?

All this hubbub around Gemini comes shortly after Google launched PaLM 2 at the I/O conference in May 2023. PaLM only went public the year before, and its own roots trace back through the development of the Language Model for Dialogue Applications (LaMDA), which Google announced at I/O 2021.

For the past several years, Mountain View has struggled to respond to the excitement around OpenAI, GPT, and the potential threats that AI-powered chat services presented to its core web search business. With deliberative perfection and the capacity to handle an entire internet’s worth of information, such a service would let users get the information they need with a single question on a single webpage, making it easier and quicker than a trip through the Google results. That’s an especially mournful thought when you consider all the eyeballs that wouldn’t be looking at those preferred listings at the top of the pile for which clients pay big bucks.

At the same time, trouble brewed at Google’s DeepMind and former Brain divisions. Dr. Timnit Gebru, one of a tiny class of Black women in the field of artificial intelligence research, claimed she was fired from the company for essentially refusing to back down from a paper she sought to publish about the environmental and societal risks posed by massive LLMs (via MIT Technology Review). In addition to controversies over research ethics, there have been underlying concerns about diverse representation – both in staff and in the data used to train AI models.

Code red

After OpenAI launched ChatGPT in late 2022, The New York Times reported from internal sources that Google was operating under a “code red.” Google then turned over large portions of its existing labor force, replacing people working on various sidecars and even in some of its major businesses like the Android operating system in order to double down on AI hires. Google co-founder Sergey Brin, who had left in December 2019, was even brought back into the fold to help with the effort (via Android Police).

The development of generative AI remains relatively unstable at Google today when compared to the newfound stability at OpenAI – especially as its CEO, Sam Altman, has just countered a coup from the board of directors, cementing his power over the organization. The layoffs and hirings continue to rack up. Stay tuned.
